Build a 48-port switch using a 24-port ASIC

![By LiveWireInnovation (Own work) [CC-BY-SA-3.0 (http://creativecommons.org/licenses/by-sa/3.0)], via Wikimedia Commons](https://thenetworksherpa.com/wp-content/uploads/2013/11/SSDTR-ASIC_technology-300x300.jpg)

- Scorpion: 24 x 10Gbps

- Trident: 48 x 10Gbps

- Trident+: 64 x 10Gbps

- Trident2: 108 x 10Gbps (108 x 10G MACs – can handle 1.2Tbps using some ports at 40G).

Arista and Merchant Silicon

Arista Networks’ strategy is to use best-of-breed merchant-silicon switch ASICs in their switches rather than developing custom ASICs in house. Using merchant silicon solves a raft of problems for Arista. The downside is that Arista is limited by the port-count of the current generation of ASICs. But you don’t stop innovating just because you’re using standardised building blocks. When Arista were developing the 7100 series switches they chose the 24 x 10Gbps Intel Fulcrum FM4000 ‘Bali’ ASIC (see the simplified diagram).

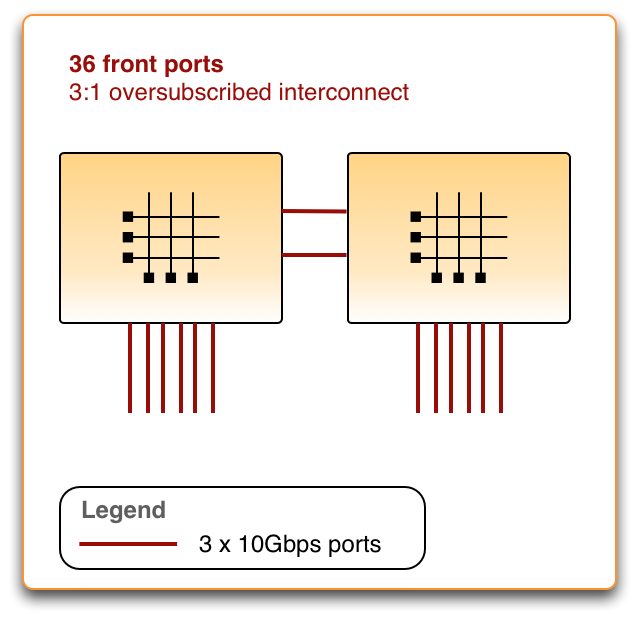

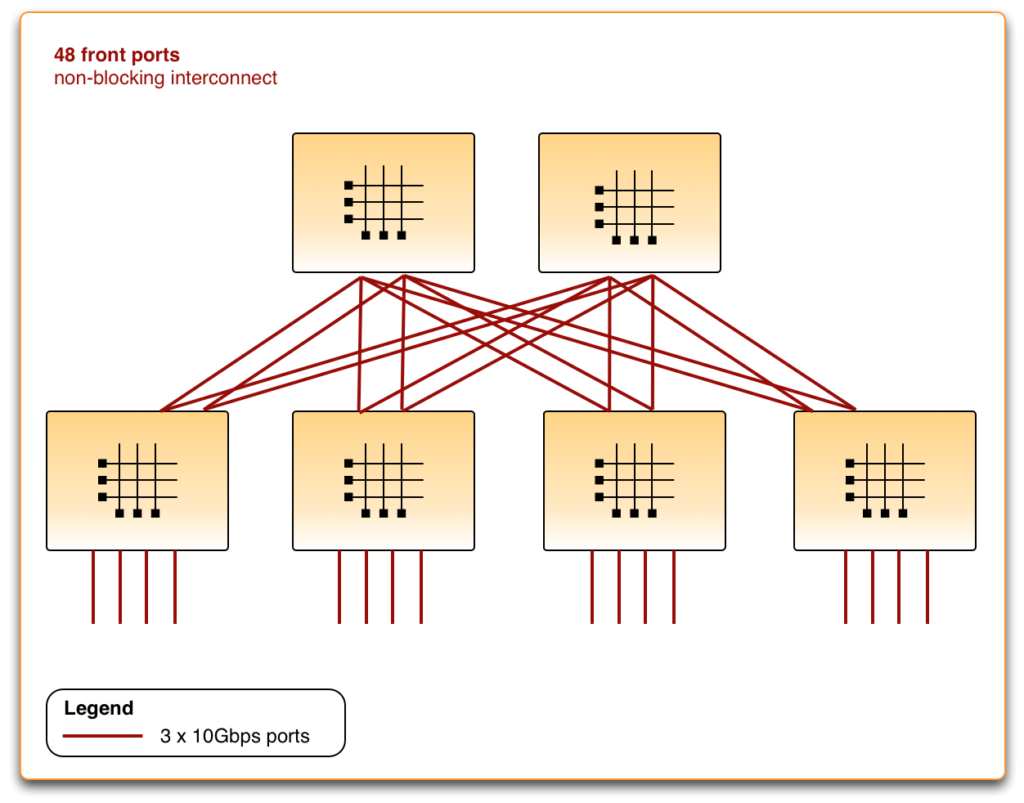

For the 7148SX, Arista needed to build a 48-port non-blocking switch from 24-port ASICs. The obvious choice here is to deploy more 24-port ASICs. So… how many do you need? Deploying two 24-port ASICs does double your total port-count; however, you will end up with two logical switches instead of one. To interconnect them you could redeploy some of the front-panel ports as inter-ASIC ports, as shown in the diagram.

Okay, that looks a bit better, but it still doesn’t meet requirements. You have only 36 front-panel ports and you need 48. Worse still, you have 3:1 oversubscription on your interconnect. This solution won’t work, so let’s look at the final solution.
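The arithmetic behind those numbers can be sketched in a few lines of Python. This is only an illustration: the function name `two_asic_design` is hypothetical, and it assumes the two ASICs are joined directly, with each ASIC giving up the same number of ports to the interconnect and, in the worst case, all traffic crossing between ASICs.

```python
from fractions import Fraction


def two_asic_design(ports_per_asic: int, interconnect_links: int):
    """Return (front_panel_ports, oversubscription) for two directly joined ASICs.

    Assumption: each ASIC dedicates `interconnect_links` ports to the other
    ASIC and uses the rest as front-panel ports.
    """
    if not 0 < interconnect_links < ports_per_asic:
        raise ValueError("interconnect_links must be between 1 and ports_per_asic - 1")
    front_per_asic = ports_per_asic - interconnect_links
    total_front = 2 * front_per_asic
    # Worst case: every front-panel port on one ASIC sends to the other ASIC.
    oversubscription = Fraction(front_per_asic, interconnect_links)
    return total_front, oversubscription


front, ratio = two_asic_design(24, 6)
print(front, ratio)  # 36 3  -> 36 front-panel ports at 3:1
```

Under the same assumptions, removing the oversubscription means giving 12 ports per ASIC to the interconnect, which leaves only 24 front-panel ports, no better than a single ASIC.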

Whoa, you need six 24-port ASICs to double the front panel port-count in a non-blocking switch. I was a little surprised that so many ASICs were needed. Note that the architecture has changed too. Arista have used a linecard-and-fabric architecture borrowed from chassis switches, but have collapsed that architecture into a single standalone switch. To simplify inter-ASIC routing, the front-facing ASICs will most likely add a destination-ASIC tag onto the packet, which keeps the fabric-ASIC lookup logic simple. There are many other multi-ASIC challenges here such as keeping the L3 and L2 tables synced in the front-facing ASICs. Although this seems like overkill for a single-RU box, it is a great strategy if you have fabric programming experience in your software team.
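As a rough check on the six-ASIC figure, here is a minimal Python sketch of the port budget. It assumes a two-tier linecard-and-fabric layout in which every front-facing ASIC splits its ports evenly between the front panel and the fabric; that even split is an assumption for illustration, not a confirmed detail of the 7148SX design, and the function name `collapsed_clos` is hypothetical.

```python
import math


def collapsed_clos(front_ports: int, radix: int):
    """Return (front_facing_asics, fabric_asics, total) for a non-blocking design.

    Assumption: each front-facing ASIC uses half its ports for the front panel
    and half as uplinks to the fabric ASICs, so uplink capacity matches
    front-panel capacity.
    """
    if radix % 2 != 0:
        raise ValueError("radix must be even to split ports evenly")
    half = radix // 2
    front_facing = math.ceil(front_ports / half)
    if front_facing > radix:
        raise ValueError("too many front-facing ASICs for a two-tier fabric")
    uplinks = front_facing * half
    # Every fabric-ASIC port terminates exactly one uplink.
    fabric = math.ceil(uplinks / radix)
    return front_facing, fabric, front_facing + fabric


print(collapsed_clos(48, 24))  # (4, 2, 6)
```

With 24-port ASICs and 48 front-panel ports, this gives four front-facing ASICs (12 ports out, 12 ports in) plus two fabric ASICs, which matches the total of six.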

Summary

Arista doesn’t need to use this particular trick any more but I love the approach of innovating beyond fixed constraints. This approach is not unique to Arista. In the Cisco/Insieme Nexus 9000, they have divided the resources of the line-card and fabric Trident2 ASICs in very different and clever ways to get the most from those small table sizes. We’re going to see a whole lot more of this trend. Innovation doesn’t die in the age of merchant silicon, it just takes new forms.

[1] This assumes perfect traffic distribution and no incast, but is still very different from planned oversubscription.

8 thoughts on “Build a 48-port switch using a 24-port ASIC”

Thanks for this article.

The first article that opened my eyes about switch internals was this one:

http://blog.thelifeofkenneth.com/2013/02/tear-down-of-hp-procurve-2824-ethernet.html

Every time I see an article like this, it does really put things into perspective again.

Wow, that is a fantastic link Lennie!! Thanks for sharing. The internal photos and teardown model is a great idea.

Hi this was a very insightful article. Thanks. Would you be able to speak a bit about the actual physical path or packet flow a packet takes inside the switch itself and how does the hardware forwarding take place within the switch and asic. When does packet get sent to the Asic. Is it happen on ingress or on egress? When does packet get analyzed by CPU or control plane. If the CPU never sees the actual packet how does asic know where to forward the packet and does that mean the packets stay within asic itself and is that what is meant to be hardware forwarding. Is Asic = dataplane. Tx

Hi Ned,

That’s a great question, but is difficult to answer quickly. I’ll see if I can put together a post to reply to your question. Is it okay if I quote your question in a post?

/John Harrington.

Hi John – tx for response. If you feel question I ask is correctly describe behaviour then please use it in post. I am not sure if question I ask is using right words or not. I m just trying to understand the path of packet when inside switch or inside asic and what is it mean to hardware forward vs alternate of cpu forward. If question is not right then pls do correct it with right words. Tx

Hi Ned,

I’ve posted my response. Feel free to follow up with any other questions on that post.

https://thenetworksherpa.com/data-control-plane-separation-sortof/

Regards,

John H