Cut-through, corruption and CRC-stomping

Corrupted frames are the devil’s spawn. A few noisy links causing frame corruption can quickly degrade network performance, and troubleshooting them is getting harder. These integrity errors generally occur when signal noise causes a binary ‘1’ to be mistaken for a binary ‘0’ or vice-versa. This post takes a look at integrity errors and the impacts of corrupted frames in a cut-through switched network. Throughout this post I’ll use the term ‘CRC errors’ to refer to frame integrity errors which were detected by CRC comparison.

FCS and the CRC

The Frame Check Sequence (FCS) is a 4-byte (32-bit) trailer added to the end of every Ethernet frame. The originator of the frame calculates a cyclic redundancy check (CRC) code over the layer-2 frame it is sending, and sends this CRC code as the frame’s FCS trailer. The receiver calculates its own CRC over the received frame and compares it with the received FCS. If there’s a mismatch, the receiver will drop the received frame and increment the ‘CRC Errors’ counter on the receive interface.
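As a rough illustration, here is how the sender and receiver sides could be sketched in Python. It assumes the standard CRC-32 that Ethernet shares with `zlib.crc32`, with the four FCS bytes appended least-significant byte first. The helper names `append_fcs` and `fcs_ok` and the dummy frame are made up for this example.

```python
import struct
import zlib


def append_fcs(frame: bytes) -> bytes:
    """Sender side: return the frame with a 4-byte CRC-32 trailer appended."""
    crc = zlib.crc32(frame) & 0xFFFFFFFF
    return frame + struct.pack("<I", crc)


def fcs_ok(frame_with_fcs: bytes) -> bool:
    """Receiver side: recompute the CRC over the body and compare with the trailer."""
    body, trailer = frame_with_fcs[:-4], frame_with_fcs[-4:]
    return struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF) == trailer


# Dummy frame: destination MAC, source MAC, EtherType, zero-padded payload.
frame = bytes.fromhex("ffffffffffff" "020000000001" "0800") + b"hello".ljust(46, b"\x00")
sent = append_fcs(frame)

# Simulate line noise by flipping a single bit somewhere in the payload.
noisy = bytearray(sent)
noisy[20] ^= 0x01
corrupted = bytes(noisy)

print(fcs_ok(sent))       # clean frame passes the check
print(fcs_ok(corrupted))  # single-bit corruption fails the check
```

A real NIC or switch does this in hardware as the bits arrive; the point here is only that the receiver never "trusts" the trailer, it recomputes and compares.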

Disambiguation

There are other error checking mechanisms in the TCP/IP stack which often get confused with each other. The IP header (just the header, not the payload!) and full TCP segment both use a 16-bit one’s-complement checksum to detect errors. Although checksums and CRCs fulfill a similar function, they are implemented differently and the terms are not interchangeable.
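To make the difference concrete, here is a minimal sketch of the 16-bit one’s-complement checksum in the style of RFC 1071, next to `zlib.crc32`. The function name `internet_checksum` and the sample IPv4 header are just for illustration. Because the checksum is a plain sum, swapping two 16-bit words leaves it unchanged, whereas the CRC notices.

```python
import zlib


def internet_checksum(data: bytes) -> int:
    """16-bit one's-complement checksum, RFC 1071 style."""
    if len(data) % 2:
        data += b"\x00"                      # pad odd-length input with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
    while total >> 16:                       # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


# Sample IPv4 header with the checksum field (bytes 10-11) set to zero.
header = bytes.fromhex("450000730000400040110000c0a80001c0a800c7")
checksum = internet_checksum(header)

# Swap the first two 16-bit words: the sum, and so the checksum, is identical.
swapped = header[2:4] + header[0:2] + header[4:]
same_checksum = internet_checksum(swapped) == checksum
same_crc = zlib.crc32(swapped) == zlib.crc32(header)

print(hex(checksum), same_checksum, same_crc)
```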

The 32-bit CRC used in the layer-2 FCS is a strong error detection mechanism. However, it is worth calling out that no mechanism is foolproof, and that errors can also creep in within the switch itself. Check out router freak’s excellent post on error detection.

For the rest of this post I’ll refer exclusively to the layer-2 frame check sequence and the cyclic redundancy check used to implement it.

How CRC works in store and forward mode

In a traditional network which uses store-and-forward switching, the entire frame is read into the switch’s buffer before a switching decision is made. In store-and-forward mode the switch can wait for the CRC code in the FCS trailer and check it against the CRC calculated from the received frame. The switch will then either discard the frame (incrementing its Rx CRC-error counter for that interface) or forward the frame out an egress interface.

Troubleshooting store-and-forward CRC errors

Thus, troubleshooting CRC errors in a store-and-forward world allows you to make one very important assumption: “Received frames with detected corruption do not get propagated”.

When a corrupt frame is detected then you can deduce that the corruption was introduced either within the sender switch or on the link between the sender and receiver interfaces. So if you observe CRC-error counters incrementing on a particular link, you know where the problem lies immediately.

You don’t yet know ‘what’ caused the problem but you know approximately ‘where’ the problem is; i.e. at either end of a single physical link. Troubleshooting steps would look loosely like:

- Clear counters and monitor

- Look for other interfaces with CRC errors (multiple ports might indicate a board/fabric problem).

- Check transceiver light levels on transmit and receive

- Traffic shift, check fiber connectors, clean fibers, replace transceivers, swap slots etc. etc.

- Verify the ‘Operational’ switching mode. Note that some platforms won’t cut through unless, for example, the frame size exceeds 576 bytes. Check your platform-specific behavior.
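The first two steps above (“clear counters and monitor”, then “look for other interfaces”) could be sketched roughly as below. This is only an illustration: the counter snapshots are invented, the function names are hypothetical, and the grouping assumes interface names of the form `Ethernet<module>/<port>`. How you collect the counters (SNMP, CLI scraping, an API) is platform-specific and not shown.

```python
from collections import defaultdict


def crc_deltas(before: dict, after: dict) -> dict:
    """Return {interface: increase in Rx CRC errors} for interfaces that grew."""
    return {
        ifname: after[ifname] - before.get(ifname, 0)
        for ifname in after
        if after[ifname] > before.get(ifname, 0)
    }


def by_module(deltas: dict) -> dict:
    """Group errored interfaces by line card, assuming names like 'Ethernet1/7'."""
    groups = defaultdict(list)
    for ifname in sorted(deltas):
        groups[ifname.split("/")[0]].append(ifname)
    return dict(groups)


# Two invented snapshots of Rx CRC-error counters taken some minutes apart.
before = {"Ethernet1/1": 10, "Ethernet1/2": 0, "Ethernet2/1": 5}
after = {"Ethernet1/1": 10, "Ethernet1/2": 42, "Ethernet2/1": 5}

deltas = crc_deltas(before, after)
print(deltas)             # only interfaces whose counter is still incrementing
print(by_module(deltas))  # several ports on one module may hint at a board problem
```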

Cut-through and the corruption propagation pandemic

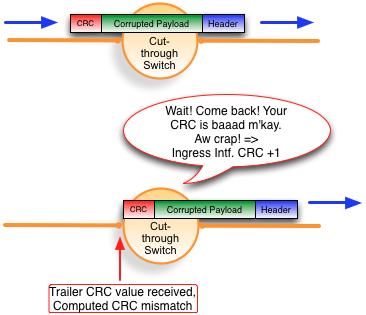

The switch may not be able to prevent the forwarding of corrupt frames when it operates in cut-through switching mode. Remember that cut-through switches will begin forwarding the frame out the egress interface before the full frame is received.

This reduces switching latency but introduces a thorny problem if the received frame was corrupted. By the time the CRC is processed the frame is already outbound on the wire. The frame transmission has to be completed but it needs an FCS trailer.

What FCS value should the switch use?

Remember that the switch still has to calculate the CRC and append the FCS for this transmitted frame. If it calculates a new CRC value for the known-corrupt frame, the frame corruption would be masked and go undetected until it arrives at its destination.

The compromise here is to ‘stomp’ the outbound CRC. Stomping the CRC ensures that the next-hop receiver will correctly identify this frame as having a CRC error. Being honest, I’m not sure how this stomping is actually implemented. You could set all 1s or you could re-use the received CRC as long as you knew the L2 frame hadn’t changed.
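Purely as a thought experiment, here is one way the behaviour could be modelled in Python. It is not how any particular vendor implements stomping. The function `cut_through_forward` is hypothetical, the 64-byte chunking just stands in for “bytes leave while the rest is still arriving”, and the stomp rule (invert every bit of the correct FCS) is my own assumption.

```python
import struct
import zlib


def fcs(body: bytes) -> bytes:
    """CRC-32 of the body as a 4-byte trailer, least-significant byte first."""
    return struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF)


def cut_through_forward(rx_frame: bytes):
    """Forward a frame cut-through style; return (tx_frame, stomped)."""
    body, rx_fcs = rx_frame[:-4], rx_frame[-4:]
    tx = bytearray()
    running = 0
    for i in range(0, len(body), 64):
        chunk = body[i:i + 64]
        running = zlib.crc32(chunk, running)  # CRC is updated as bytes stream through
        tx += chunk                           # ...and the chunk is already on the wire
    good = struct.pack("<I", running & 0xFFFFFFFF)
    if good == rx_fcs:
        return bytes(tx) + good, False
    # Too late to drop the frame. Assumed stomp rule: send the inverted FCS,
    # which is guaranteed not to match what the next hop computes.
    return bytes(tx) + bytes(b ^ 0xFF for b in good), True


body = bytes(range(256)) * 2
clean = body + fcs(body)

noisy = bytearray(clean)
noisy[100] ^= 0x10                 # corruption picked up on the ingress link
hop1, stomped1 = cut_through_forward(bytes(noisy))
hop2, stomped2 = cut_through_forward(hop1)   # next cut-through switch sees it too
print(stomped1, stomped2)
```

One reason to prefer an inverted FCS over a fixed value such as all 1s in a model like this: a fixed value could, very rarely, happen to equal the correct CRC, while the inverted value never can.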

Have a look at the Cisco Nexus 5000 stomp procedure. If the sending device increments the Stomp and Tx CRC error counters you know that the device knowingly propagated CRC-errored frames. That’s nice, but if you’ve got a large network with a single bad top-of-rack cable then you’d see Tx and Rx CRC errors all across your cut-through switching domain. The behavior on the next-hop cut-through switch would be the same: mark the Rx/Tx/Stomp counters, but still propagate the frame. CRC errors, spreading like the plague!

Troubleshooting cut-through CRC errors

Let’s get this straight. The dodgy links causing the CRC errors will still cause the same level of pain and upset to your customers, and application owners will still observe the impact as IP packet loss. Corrupt frames will be propagated farther than normal within your network but hopefully there aren’t enough errored frames for that to be a bandwidth concern.

No, the problem here is identifying the source of the CRCs. Your monitoring system will now detect CRCs at multiple points in the network for a single noisy-link event. The more ‘truly-errored’ links in your network the harder it will be to trace them back through the network.
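One way to reason about this, if your platform exposes both counters, is to compare each receiver’s Rx CRC count with the number of frames the sender on the same link knowingly stomped. The sketch below is an assumption-heavy illustration: the `LinkCounters` fields, device names and numbers are invented, and the counts are taken to be deltas over the same interval for one direction of each link.

```python
from dataclasses import dataclass


@dataclass
class LinkCounters:
    sender: str       # transmitting interface (hypothetical naming)
    receiver: str     # receiving interface at the other end of the link
    tx_stomped: int   # frames the sender knowingly sent with a stomped CRC
    rx_crc: int       # CRC errors counted by the receiver


def suspect_links(links):
    """Links where the receiver saw more CRC errors than the sender stomped."""
    return [link for link in links if link.rx_crc > link.tx_stomped]


links = [
    LinkCounters("host7:eth0", "leaf1:Eth1/7", tx_stomped=0, rx_crc=120),
    LinkCounters("leaf1:Eth1/49", "spine1:Eth2/1", tx_stomped=120, rx_crc=120),
    LinkCounters("spine1:Eth2/2", "leaf2:Eth1/49", tx_stomped=120, rx_crc=120),
]

for link in suspect_links(links):
    print(f"{link.sender} -> {link.receiver}: corruption likely introduced here")
```

In this made-up data all three receivers report CRC errors, but only the first link has errors that the sender didn’t already know about, so that is where the noise is coming in.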

Actions and summary

- Be aware that your CRC troubleshooting approach needs to change if you enable cut-through switching.

- Monitor every port. It’s very likely that the problems will originate at the edge of your network. If you see Rx CRC at the edge of your network you’re back to single-link troubleshooting. If you have trouble monitoring all your links, check out StatSeeker which is very well suited to this job. There are some good engineer reviews by the Lone SysAdmin, LameJournal and the NetworkingNerd.

- Treat CRC errors seriously and act fast. CRC errors really hurt your customers so you should already be reacting fast. However, you need to know that CRC fault-location becomes much harder in a cut-through environment when there are multiple errored links. So detect and correct noisy links early, or triage and troubleshooting will get harder still.

Other war stories

Here’s an interesting cut-through war-story where the ether-type was being mangled and thus the dot1q header was not interpreted. This led to unicast flooding of corrupt frames on the default vlan.

Do you have any war stories? I’d love to hear from you in the comments.

12 thoughts on “Cut-through, corruption and CRC-stomping”

Great post, thanks a lot. I have not run into any major CRC issues with 5Ks yet and thankfully I’ve read this post before that happens.

Thanks Will, it’s great to get feedback and I hope the post helps. This topic certainly confused the heck out of me before I looked deeper.

I’ve always thought that if a frame source and destination MAC addresses do not change (because the frame starts and terminates on a single layer 2 network), that the egress CRC was just passed forward (vs. being stripped off and recalculated). Is this true?

Hi Rob,

Thanks for the question. I’ll be totally honest here and say that I’m not sure. If you’re doing pure L2 switching and the frame contents don’t change, you get away without changing the FCS. If you sent a frame from an access vlan to a tagged vlan on a trunk, the switch would need to recompute the FCS.

Given that switches should be executing this operation in an ASIC, it’s probably less work to recompute every FCS, rather than burning transistors to do the if/then logic in silicon. Just a guess though.

/John H

So, I haven’t been able to find the command on the web (if it even exists), but is there a way to:

A. see what the default switching method is (cut-through or store-and-forward)?

B. change the default switching method

Note: This is in reference to Nexus 7K not 5K for my current setup…

Thanks,

~Steve

Hi Stephen,

I hadn’t messed with cut-through on Nexus 7K either, but according to the NX-OS and Cisco Nexus Switching book … The Nexus 7000 series of switches implements store-and-forward switching.

This is the only reference to store and forward / cut-through for nexus 7k in the book. Given that further reference is made to the 5k cut-through option, I think it’s highly likely that N7K is store-and-forward only. If you wanted 100% confirmation you could always hit up Ron Fuller on twitter.

/John H

per http://www.amazon.co.uk/NX-OS-Cisco-Nexus-Switching-Next-generation/dp/1587143046/

page 60

N7K – implements store-and-forward switching

N5K – implements cut-through switching, except when dissimilar transmission speeds exist between ingress and egress ports.

This was very informative, thank you very much for this post about cut-through switching.

Way to locate source of the problem on Nexus is with command…

show hardware internal bigsur all-ports detail

…NOT_STOMPED errors can then be grepped out

Thanks for the comment Dave.